Workplace title promotions

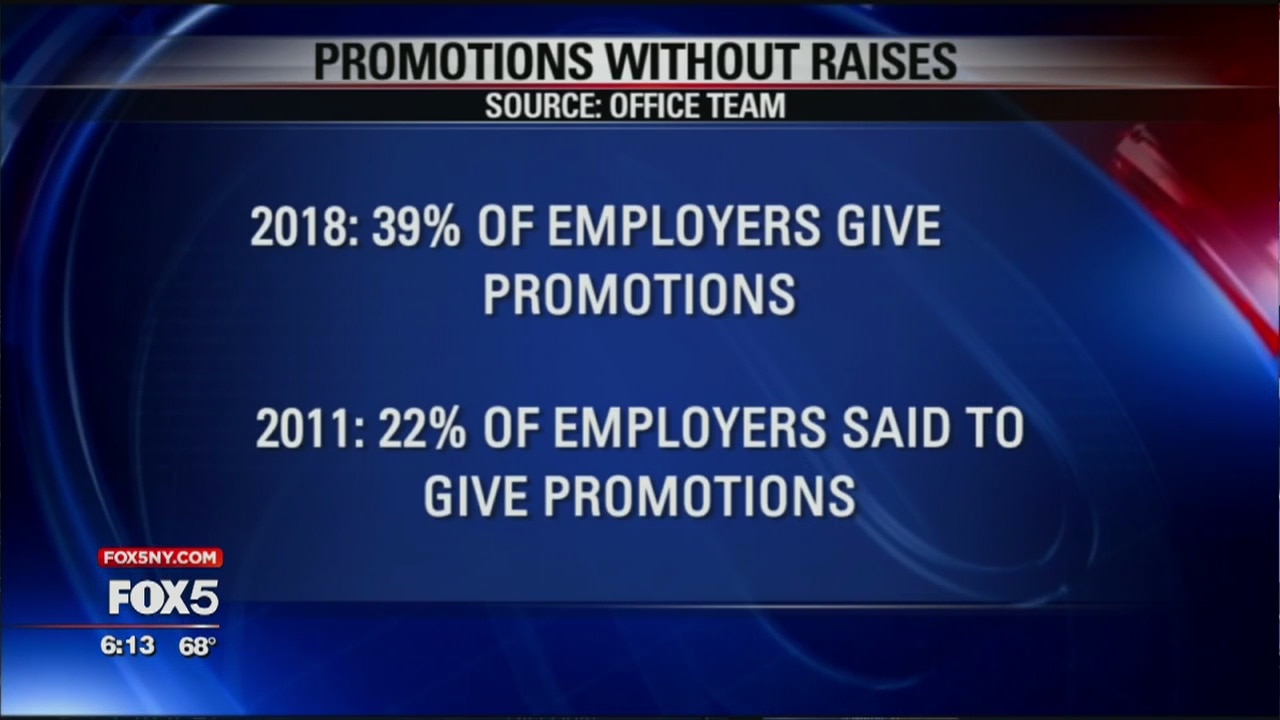

Getting a promotion at work is usually something to be happy about. A new job title typically means more money, but sometimes that's not the case. What if you were offered a promotion without a pay raise? A recent study found that bosses are offering employees promotions without a raise at nearly twice the rate they did seven years ago.